
What are AI Semiconductors? Power Problems in Data Centers and Game-Changing Technology

What are AI Semiconductors? How They are Different from Traditional Chips

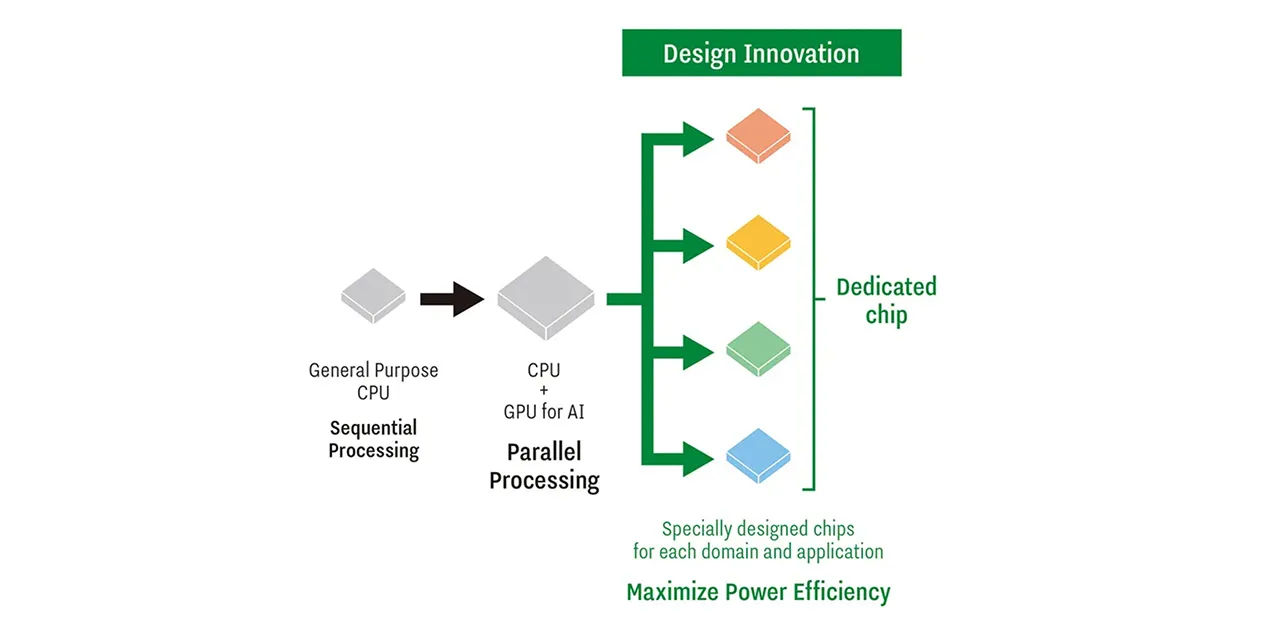

AI semiconductors are computer chips specially designed to handle the heavy calculations that AI algorithms require. Unlike general-purpose chips such as central processing units (CPUs), which are built to perform many different tasks, AI chips are defined by parallel processing, which lets them work through vast amounts of data much faster. Because AI training and inference involve massive amounts of math and data, general-purpose chips often struggle to keep up in both speed and energy efficiency.
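As a rough illustration of why parallel processing matters, the sketch below counts how many "rounds" of work are needed when a batch of independent operations is spread across a given number of cores. It is an idealized model, not a benchmark: it assumes every core does one operation per round and ignores memory bandwidth, clock speed and scheduling overhead. The core counts and the function name `processing_rounds` are hypothetical values chosen for the example.

```python
import math


def processing_rounds(num_operations: int, num_cores: int) -> int:
    """Rounds needed if each core completes one independent operation per round."""
    return math.ceil(num_operations / num_cores)


# Hypothetical workload: one million independent multiply-add operations.
OPERATIONS = 1_000_000

cpu_rounds = processing_rounds(OPERATIONS, num_cores=8)       # a few powerful cores
gpu_rounds = processing_rounds(OPERATIONS, num_cores=10_000)  # many small cores

print(f"8 cores:      {cpu_rounds} rounds")
print(f"10,000 cores: {gpu_rounds} rounds")
```

Real chips differ in per-core speed, so the gap in practice is not simply the ratio of core counts, but the model shows why workloads made of many independent operations favor wide parallel hardware.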

AI semiconductors come in several types, including graphics processing units (GPUs), which were originally developed for image processing, and more specialized chips such as field-programmable gate arrays (FPGAs) and application-specific integrated circuits (ASICs). These chips are optimized for running AI algorithms and handle those workloads much faster than traditional CPUs.

One popular chip architecture today combines a GPU with high bandwidth memory (HBM). Traditionally, GPUs had memory installed outside the chip, but HBM stacks multiple layers of memory inside the GPU package, connecting them closely to the GPU using a special base called an interposer. This structure enables data to move faster through wider paths, which helps the GPU perform at its best.
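The "wider paths" idea can be put into numbers with the usual peak-bandwidth formula: interface width in bits times the data rate per pin, divided by 8 to convert bits to bytes. The figures below are illustrative assumptions in the range of published HBM3 and GDDR6 parts and do not come from this article; `peak_bandwidth_gb_s` is a hypothetical helper name.

```python
def peak_bandwidth_gb_s(bus_width_bits: int, gbit_per_s_per_pin: float) -> float:
    """Theoretical peak bandwidth in gigabytes per second."""
    return bus_width_bits * gbit_per_s_per_pin / 8


# Assumed example values (not from the article):
# one HBM stack with a very wide 1024-bit interface at 6.4 Gbit/s per pin
hbm_stack = peak_bandwidth_gb_s(bus_width_bits=1024, gbit_per_s_per_pin=6.4)
# one external memory chip with a narrow 32-bit interface at 16 Gbit/s per pin
external_chip = peak_bandwidth_gb_s(bus_width_bits=32, gbit_per_s_per_pin=16.0)

print(f"HBM stack:     {hbm_stack:.1f} GB/s")
print(f"External chip: {external_chip:.1f} GB/s")
```

Even though each pin in this example runs slower on the HBM side, the much wider interface yields far more total bandwidth per memory device, which is the effect the interposer-based design is after.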

AI semiconductors can also be scaled and customized for different purposes. By adding special components called accelerators, they can handle advanced AI tasks more efficiently than regular chips. With these strengths, AI semiconductors are now being utilized in many fast-growing fields like generative AI, self-driving cars and edge devices.

| Feature | AI Semiconductor Chips (GPU, FPGA, ASIC, etc.) | Conventional Chips (e.g., CPU) |
|---|---|---|
| Main Purpose | Accelerate AI training and inference | Handle a wide range of general-purpose calculations |
| Processing Method | Large-scale parallel processing with up to tens of thousands of cores | Mostly sequential processing with a small number of cores |
| AI Performance/Power Efficiency | Faster and more energy-efficient for the same tasks | AI tasks are inefficient and consume a lot of power |
| Customizability | Circuits are optimized for specific use, with minimal wasted logic | Limited optimization for AI tasks |
| Typical Applications | Smartphone AI features, voice assistants, autonomous driving, cloud AI | PCs, home appliances, game consoles |

Data Centers are Reaching Their Limit

As AI becomes more common, the amount of data to be processed is exploding. To keep up, we need more powerful computers that can handle such data. That is why today's large-scale data centers need more and more semiconductors, especially high-performance chips like GPUs.

AI processing is usually divided into two parts: training and inference. Training is when the AI learns by using huge amounts of data, which requires a lot of computing power, almost like using a supercomputer, and it also consumes a ton of electricity. On the other hand, inference is when the AI uses what it learned as a trained model to make real-world predictions or decisions.
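The split between the two phases can be seen even in a toy model. The sketch below is a minimal illustration in plain Python, assuming a one-variable linear model fitted by gradient descent; real AI models are vastly larger, and the data, learning rate and function names here are made up for the example. Note how `train` loops over the whole dataset thousands of times, while `infer` is a single cheap calculation.

```python
def train(samples, epochs=5000, lr=0.01):
    """Training: repeatedly adjust w and b to reduce the squared error."""
    w, b = 0.0, 0.0
    n = len(samples)
    for _ in range(epochs):
        # Gradients of the mean squared error with respect to w and b.
        grad_w = sum(2 * (w * x + b - y) * x for x, y in samples) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in samples) / n
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b


def infer(model, x):
    """Inference: apply the already-trained parameters to a new input."""
    w, b = model
    return w * x + b


# Made-up training data that follows y = 3x + 1.
data = [(x, 3.0 * x + 1.0) for x in range(10)]

model = train(data)            # expensive: many passes over the data
prediction = infer(model, 20)  # cheap: one multiply and one add

print(f"learned w={model[0]:.3f}, b={model[1]:.3f}, prediction for x=20: {prediction:.2f}")
```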

According to Energy and AI, an April 2025 report from the International Energy Agency (IEA), electricity use in data centers worldwide is expected to more than double by 2030, reaching about 945 terawatt-hours, slightly more than all the electricity Japan uses today. AI-focused data centers are the main driver: their power use could more than quadruple over the same period. To handle this massive demand, it is urgent to bring in more renewable energy and keep the power supply stable.
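To get a feel for what these projections mean year to year, the sketch below converts an overall growth multiple into an implied compound annual growth rate. It takes the figures above at face value (a doubling to about 945 TWh and a roughly fourfold rise for AI-focused data centers) and assumes a six-year window from 2024 to 2030; that window is an assumption made for this example, not a figure from the report.

```python
def implied_annual_growth(multiple: float, years: int) -> float:
    """Compound annual growth rate that produces `multiple` after `years`."""
    return multiple ** (1 / years) - 1


TARGET_TWH_2030 = 945.0
YEARS = 6  # assumption: growth measured from 2024 to 2030

baseline_twh = TARGET_TWH_2030 / 2  # implied starting point if demand exactly doubles
all_data_centers = implied_annual_growth(2.0, YEARS)
ai_data_centers = implied_annual_growth(4.0, YEARS)

print(f"Implied baseline:       {baseline_twh:.1f} TWh")
print(f"All data centers:       {all_data_centers:.1%} per year")
print(f"AI-focused data centers: {ai_data_centers:.1%} per year")
```

Under these assumptions, a doubling works out to roughly 12% growth per year, and a quadrupling to roughly 26% per year.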

Running AI models for training and inference uses massive amounts of electricity. As AI becomes more complex, data centers relying on GPUs face urgent concerns, such as high power use and cooling costs. Just one high-performance GPU server uses several kilowatts of electricity and produces a lot of heat, which requires efficient cooling. But introducing cooling systems and smart rack setups costs a lot too. There is only so much computing power available. Therefore, managing electricity use, cooling costs and how computing resources are shared will be some of the biggest challenges for future data centers.
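A back-of-the-envelope calculation shows how quickly "several kilowatts" adds up. The sketch below assumes a server drawing a constant 5 kW around the clock and a cooling overhead of 30% on top of that; both numbers are hypothetical placeholders, not measurements, and real values vary widely by hardware and facility.

```python
HOURS_PER_YEAR = 24 * 365


def annual_energy_kwh(server_kw: float, cooling_overhead: float = 0.0) -> float:
    """Yearly energy for one server running continuously.

    cooling_overhead is the extra fraction of power spent on cooling
    (0.3 means 30% on top of the server's own draw).
    """
    return server_kw * (1 + cooling_overhead) * HOURS_PER_YEAR


# Hypothetical values for illustration only.
server_only = annual_energy_kwh(server_kw=5.0)
with_cooling = annual_energy_kwh(server_kw=5.0, cooling_overhead=0.3)

print(f"Server alone:  {server_only:,.0f} kWh per year")
print(f"With cooling:  {with_cooling:,.0f} kWh per year")
```

Multiplying the result by the number of servers in a facility gives a sense of why power and cooling dominate data center planning.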

AI in Edge Devices - The Rise of Edge AI

AI does not just run on cloud servers in giant data centers. AI is also being used more and more in edge devices, such as smartphones, smart home appliances, cars, smart glasses, AR/VR headsets and even portable translators.

AI accelerators play a significant role in these devices. They can handle tasks like image analysis and voice recognition directly inside the device, quickly and with less power. Processing data on the device enables real-time responses and better security, while dramatically reducing communication costs. In cars, AI accelerators help analyze video from cameras to detect problems or support self-driving features. In smart glasses or AR/VR devices, they track where you are looking and layer helpful information onto your view. In portable translators, they make real-time speech translation possible on the spot. Thanks to the advancement of AI accelerators, edge devices are becoming smarter and more independent. They can now perform powerful AI functions without relying on cloud servers, making them useful in everything from industry to everyday life.
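The pattern described here, where the device decides locally and sends only what matters, can be sketched in a few lines. Everything in this example is hypothetical: the `Frame` type, the `anomaly_score` field and the threshold stand in for whatever an on-device model would actually compute, and no real device or cloud API is used.

```python
from dataclasses import dataclass


@dataclass
class Frame:
    frame_id: int
    anomaly_score: float  # stand-in for the output of an on-device AI model


def detect_on_device(frame: Frame, threshold: float = 0.5) -> bool:
    """Local decision made on the device, with no network round trip."""
    return frame.anomaly_score >= threshold


def process_stream(frames, threshold: float = 0.5):
    """Return only the small event records that would be sent to the cloud."""
    uploads = []
    for frame in frames:
        if detect_on_device(frame, threshold):
            # Only a compact event summary leaves the device, not the raw data.
            uploads.append({"frame_id": frame.frame_id, "event": "anomaly"})
    return uploads


# Made-up camera frames with precomputed scores.
frames = [Frame(1, 0.1), Frame(2, 0.9), Frame(3, 0.2), Frame(4, 0.7)]
uploads = process_stream(frames)

print(f"{len(uploads)} of {len(frames)} frames produced an upload: {uploads}")
```

Because the raw frames never leave the device in this sketch, both the communication volume and the exposure of sensitive data shrink, which matches the benefits described above.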

The Potential of AI Accelerators

GPUs were originally made for image processing, and they are really good at handling AI tasks. However, they use a lot of electricity and are expensive, so they are not always the best fit for edge devices.

This is where AI accelerators come in. They are built specifically for high-speed, low-power AI computation and specialize in particular AI tasks such as deep learning and neural network processing. As a result, they can use simpler, more efficient circuits than GPUs and CPUs, which makes them cheaper, smaller and much less power-hungry: ideal for edge devices and embedded systems. With AI accelerators, mobile and compact devices like smartphones, IoT devices, appliances and even cars can run AI in real time at low power consumption.

Many of these accelerators use specialized parts such as FPGAs and ASICs, which are custom-designed to perform necessary AI computations quickly and efficiently. AI accelerators literally accelerate the growth of edge AI within a wide variety of AI applications, checking all the right boxes: low power use, low cost and small size.

How Rapidus and Tenstorrent are Shaping the Future

In February 2024, Rapidus teamed up with Tenstorrent—a company dedicated to creating affordable, open computing platforms for AI, machine learning and HPC—to build a next-generation AI accelerator for edge devices, using 2-nanometer (nm) logic.

This project, promoted under the framework of "Development of Edge AI Accelerators Using 2nm-Generation Semiconductor Technology," is part of a large national research effort supported by Japan's New Energy and Industrial Technology Development Organization (NEDO), the "Post-5G Information and Communication System Infrastructure Enhancement R&D Project," and was adopted by the Leading-edge Semiconductor Technology Center (LSTC), a research institute that aims to develop technologies for mass-producing next-generation semiconductors. Tenstorrent brings top-tier technology to the table: leading-edge RISC-V CPUs and chiplet intellectual property (IP), both crucial for building high-performance, compact AI chips. Linking these strengths with Rapidus' manufacturing platform can optimize both design and mass production processes.

Rapidus, on the other hand, specializes in manufacturing these advanced chips, with a focus on disruptive, innovative approaches that facilitate the design and production of semiconductors for edge AI.

Edge AI runs AI-based data processing and decision-making directly on a device (an edge device such as a smartphone or IoT device) instead of sending everything to the cloud. This lets the device make decisions almost instantly, without waiting on a network round trip. Since only the essential data is sent to the cloud, it saves on communication costs. Plus, because sensitive information stays on the device, it is also safer and more secure. Edge AI is especially useful in things like self-driving cars and industrial robots, where fast and reliable decision-making is important.

A CPU is like the command center of a computer, handling all kinds of tasks and controls. CPUs usually have just a few very powerful cores that process tasks quickly one after another, making them great for handling complex tasks and conditional branches. CPUs are general-purpose chips, and well-known makers include Intel and AMD.

A GPU is a semiconductor chip specialized in handling images and 3D graphics. Its biggest strength is that it has thousands of small cores working at the same time, which is called parallel processing. This enables GPUs to process a vast amount of data simultaneously. That makes GPUs well suited to AI tasks like training neural networks and running trained models for inference, which involve tons of math that GPUs handle more efficiently than CPUs. Currently, GPUs are key to powering things like generative AI and deep learning, and companies such as NVIDIA are leading the way in making these advanced chips.
