
What Is Edge AI? How the Latest AI Trends Are Tied Closely to Semiconductors

AI is already part of everyday life—unlocking smartphones with facial recognition, identifying voices on smart speakers and helping robot vacuums clean more efficiently. Importantly, not all of these tasks are handled in massive data centers. In recent years, edge AI—where devices such as smartphones and home appliances run AI processing on their own—has been spreading rapidly.

So why can AI workloads that once required data centers now run on palm-sized devices? The answer lies in advances in semiconductors. The evolution of AI models and semiconductor technology has progressed hand in hand, reinforcing each other like two gears in the same machine. And for edge AI in particular, high performance and low power consumption are both essential.

In this article, we explain what edge AI is, how it differs from cloud AI and what benefits it brings, along with the semiconductor innovations that make it possible.

What Is Edge AI? How It Differs from Cloud AI—and Why It Matters

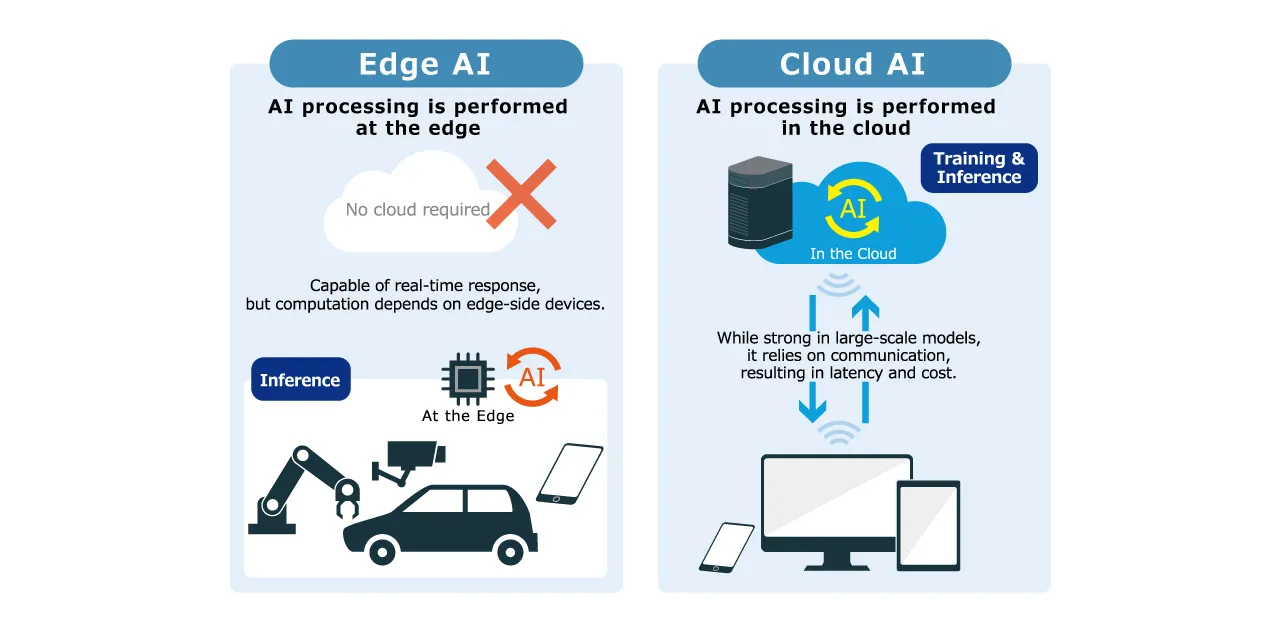

Edge AI refers to technology that completes AI processing on the device itself, where the data is generated—for example, on a smartphone or other end device. With the more traditional cloud AI approach, data collected on a device is sent over the internet to remote data centers, where powerful servers analyze it and make decisions. With edge AI, by contrast, data does not need to leave the device: the device runs AI processing locally.

Edge AI

Typical examples of edge AI include smartphone facial recognition, voice recognition on smart speakers, object detection in AI-enabled cameras and health data analysis on smartwatches. Because processing happens on-device, edge AI can deliver real-time responses and drastically reduce latency compared with sending data over the network. It can also strengthen privacy and security, since sensitive data can stay on the device. In many cases, edge AI reduces network bandwidth usage and communication costs as well. Another major advantage is resilience: edge AI can keep working even when connectivity is limited—or completely offline.
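To make the bandwidth point concrete, the sketch below compares the uplink traffic of streaming raw camera frames to the cloud with sending only on-device detection results. It is a back-of-the-envelope illustration. The resolution, frame rate, object count and 32-byte result size are assumptions, and real cameras compress video before sending it, so the actual gap is smaller than this raw-frame comparison suggests.

```python
# Hypothetical comparison: streaming raw camera frames to the cloud versus
# running detection on-device and uploading only compact results.
# All numbers are illustrative assumptions, not measurements.

def raw_video_bytes_per_second(width: int, height: int, bytes_per_pixel: int, fps: int) -> int:
    """Uplink bytes per second if every uncompressed frame is sent to the cloud."""
    return width * height * bytes_per_pixel * fps


def edge_result_bytes_per_second(detections_per_frame: int, bytes_per_detection: int, fps: int) -> int:
    """Uplink bytes per second if only detection results (e.g. label + box) are sent."""
    return detections_per_frame * bytes_per_detection * fps


raw = raw_video_bytes_per_second(1920, 1080, 3, 30)  # 1080p, 24-bit RGB, 30 fps
edge = edge_result_bytes_per_second(5, 32, 30)        # assume 5 objects, 32 bytes each
print(f"raw frames:   {raw / 1e6:.1f} MB/s")          # 186.6 MB/s
print(f"edge results: {edge / 1e3:.1f} kB/s")         # 4.8 kB/s
print(f"reduction:    {raw / edge:,.0f}x")
```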

At the same time, edge devices have limited computing resources, so there are constraints on the size and complexity of AI models they can handle. In particular, training large AI models requires enormous compute and is rarely done on edge devices; it is typically performed in the cloud. In practice, a common division of labor is:

- Edge AI: primarily runs inference (using trained models to produce results)

- Cloud AI: handles large-scale training and more compute-intensive processing

That balance is shifting, however. Thanks to advances in model optimization and the evolution of dedicated AI hardware (such as NPUs and TPUs), sophisticated inference workloads that once required cloud-scale resources are increasingly feasible on edge devices. As a result, the range of tasks edge AI devices can perform continues to expand.
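The article does not name a specific optimization technique. One widely used example is quantization, which stores weights as 8-bit integers instead of 32-bit floats. The following is a minimal sketch of symmetric per-tensor int8 post-training quantization in plain Python, using random stand-in weights rather than a real model.

```python
import random

# Minimal sketch of symmetric per-tensor int8 post-training quantization.
# The "weights" are random stand-in values, not taken from a real model.

def quantize_int8(weights: list[float]) -> tuple[list[int], float]:
    """Map float weights to int8 codes in [-127, 127] sharing one scale factor."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q: list[int], scale: float) -> list[float]:
    """Recover approximate float weights from the int8 codes."""
    return [v * scale for v in q]


random.seed(0)
weights = [random.gauss(0.0, 0.05) for _ in range(10_000)]
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)

max_err = max(abs(a - b) for a, b in zip(weights, restored))
print(f"storage: {len(weights) * 4} bytes as float32 -> {len(q)} bytes as int8")
print(f"max abs error {max_err:.6f} <= scale/2 = {scale / 2:.6f}")
```

Storing each weight in one byte instead of four cuts model size by 4x, and the rounding error per weight stays within half a quantization step. Production toolchains typically add refinements such as per-channel scales and calibration data.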

Cloud AI

Cloud AI runs AI workloads on powerful server clusters in remote data centers, using high-performance GPUs and dedicated AI accelerators. Conversational generative AI services based on large language models (LLMs), large-scale image analysis and translation services are typical examples.

Because cloud environments can draw on massive compute resources, they can handle extremely complex and very large models. The trade-offs are also well known: cloud AI can introduce network latency, requires continuous data transmission and raises privacy and security concerns while data is in transit. Cloud infrastructure also consumes large amounts of electricity, including for cooling, so environmental impact and energy efficiency have become major issues. To address this, data centers are accelerating improvements such as more efficient cooling and broader use of renewable energy.

| Feature | Cloud AI | Edge AI |
|---|---|---|
| Processing location | Remote data centers (cloud servers) | On the device (smartphone, PC, home appliance, etc.) |
| Processing capability | Very high (large models and complex processing possible) | Limited (mainly lightweight models and small-scale processing) |
| Processing speed | Depends on network conditions; prone to latency | Real-time processing with very low latency |
| Network dependency | High (requires a constant internet connection) | Low (can operate offline) |
| Communication cost | High (large volumes of data transferred) | Low (data processed on-device, reducing traffic) |
| AI model updates | Centrally managed and updated in the cloud | Must be distributed to and updated on each device |
| Power consumption | High (including data center cooling) | Kept low at the device level by increasingly power-efficient designs |
| Main uses | • Large language model training • Large-scale data analysis • AI features in cloud services | • Smartphone facial recognition • Smart speaker voice recognition • Autonomous vehicle decision-making • Real-time control of industrial robots |
| Advantages | • Scalability • No need to prepare or manage your own servers • Supports complex, large-scale AI models | • Low-latency processing • Stronger security • Lower communication costs • Offline operation |
| Disadvantages | • Network latency • Communication costs • Security risks | • Limited by device computing resources • Unsuitable for large-scale AI model training |
| Required semiconductors | • High-performance GPUs and dedicated AI chips (ASICs) • High-speed memory and large-capacity storage | • Compact, power-efficient NPUs and SoCs • Low-power memory (LPDDR) • Various sensor semiconductors |
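The trade-offs in the comparison above can be read as a simple placement policy. The sketch below is a hypothetical illustration: the `Request` and `DeviceState` types, their field names and the decision order are assumptions rather than any real framework's API, and a real system would also weigh server processing time, privacy requirements and battery state.

```python
from dataclasses import dataclass

# Hypothetical edge-vs-cloud placement policy; names and thresholds are
# illustrative assumptions, not part of any real framework.

@dataclass
class Request:
    model_size_mb: float      # memory needed to run the model
    latency_budget_ms: float  # deadline for the result


@dataclass
class DeviceState:
    free_memory_mb: float       # memory available for AI workloads
    online: bool                # is a network connection available?
    cloud_round_trip_ms: float  # recently measured round-trip time to the cloud


def choose_target(req: Request, dev: DeviceState) -> str:
    """Return "edge", "cloud" or "none" for a single inference request."""
    if req.model_size_mb <= dev.free_memory_mb:
        return "edge"   # fits locally: no upload, low latency, works offline
    if dev.online and dev.cloud_round_trip_ms <= req.latency_budget_ms:
        return "cloud"  # too large for the device, but the network can meet the deadline
    return "none"       # neither option satisfies the constraints


phone = DeviceState(free_memory_mb=2_000, online=True, cloud_round_trip_ms=80)
print(choose_target(Request(model_size_mb=300, latency_budget_ms=50), phone))        # edge
print(choose_target(Request(model_size_mb=40_000, latency_budget_ms=2_000), phone))  # cloud
```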

Semiconductor Technologies Powering Edge AI

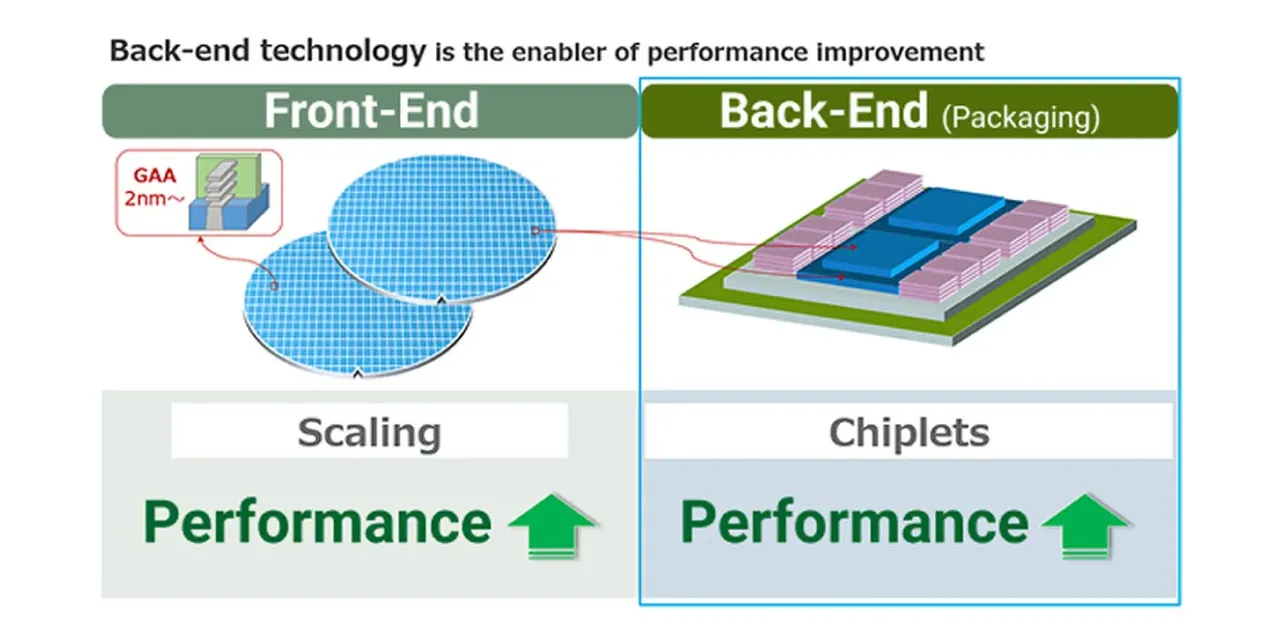

Edge AI has grown alongside ongoing breakthroughs in semiconductor technology. Running advanced AI on small, power-limited devices requires progress across multiple layers—logic chips, memory, sensors, and packaging. Let's look at the key building blocks.

Logic Chips: Smaller, Faster, More Efficient

Logic semiconductors are the brain of edge AI devices, and they continue to improve in both miniaturization and energy efficiency. As planar transistors approached scaling limits around the 20nm generation, FinFET structures became widely adopted. In more advanced nodes, gate-all-around (GAA) transistors have been introduced, with nanosheet structures helping improve performance while reducing power consumption.
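Part of why lower-voltage, more efficient transistors save energy can be seen from the standard first-order model of CMOS dynamic (switching) power, where α is the activity factor, C the switched capacitance, V the supply voltage and f the clock frequency. The voltages below are illustrative assumptions, and the model ignores leakage power, which becomes increasingly important at advanced nodes.

```latex
P_{\text{dyn}} = \alpha\, C\, V^{2} f
\qquad\Longrightarrow\qquad
\frac{P_{\text{dyn}}(0.7\,\text{V})}{P_{\text{dyn}}(0.9\,\text{V})}
= \left(\frac{0.7}{0.9}\right)^{2} = \frac{0.49}{0.81} \approx 0.60
```

With α, C and f held fixed, lowering the supply voltage from 0.9 V to 0.7 V cuts dynamic power by roughly 40%, which is why voltage scaling matters so much for battery-powered edge devices.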

Rapidus is developing a 2nm process based on GAA technology and is targeting mass production in 2027. In 2025, it announced a successful prototype, helping accelerate the path toward high-performance chips suited for edge AI. Because edge devices mainly run inference, SoCs often integrate power-efficient components such as NPUs and DSPs, enabling features like real-time subject detection on smartphones.
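NPUs gain much of their efficiency from integer arithmetic. As a rough, hypothetical model of what such a datapath does (not a description of any specific NPU), the sketch below multiplies int8 values, accumulates the products in a 32-bit register and applies a single floating-point rescale at the end. The scales and values are made up for illustration.

```python
# Hypothetical model of an NPU-style integer dot product: int8 x int8
# multiplies, int32 accumulation, one float rescale at the end.

INT32_MIN, INT32_MAX = -(2**31), 2**31 - 1


def int8_dot(a_q: list[int], b_q: list[int], a_scale: float, b_scale: float) -> float:
    """Dot product of two int8 vectors, rescaled to an approximate real value."""
    if len(a_q) != len(b_q):
        raise ValueError("vectors must have the same length")
    acc = 0  # models a 32-bit accumulator register
    for x, y in zip(a_q, b_q):
        if not (-128 <= x <= 127 and -128 <= y <= 127):
            raise ValueError("inputs must be int8 values")
        acc += x * y  # an int8 x int8 product always fits in 16 bits
        if not INT32_MIN <= acc <= INT32_MAX:
            raise OverflowError("int32 accumulator overflow")
    return acc * a_scale * b_scale  # one floating-point rescale at the end


activations = [12, -7, 100, 45]  # hypothetical quantized activations
weights = [-3, 20, 5, -1]        # hypothetical quantized weights
print(int8_dot(activations, weights, a_scale=0.02, b_scale=0.01))
```

Keeping the inner loop in cheap integer operations and deferring the single float multiply to the end is the design choice that lets dedicated inference hardware do more work per watt than general-purpose floating-point units.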

Rapidus is also collaborating with Tenstorrent in Canada to co-develop edge AI accelerators using 2nm technology. By combining Tenstorrent's RISC-V cores with Rapidus' manufacturing technology, the effort aims to support advanced inference workloads, including those used for generative AI.

Memory: Feeding Data at High Speed

AI performance depends not only on compute but also on how quickly data can be delivered to the processor. For edge devices, where power is limited, low-power, high-speed memory is especially important, and LPDDR plays a key role here. Recent LPDDR5X memory reaches data rates above 8 Gbps per pin while maintaining energy efficiency, making it well suited for smartphones, AR/VR devices and automotive AI.
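A quick calculation shows why memory bandwidth can cap on-device AI performance. The sketch assumes a 64-bit LPDDR5X interface at 8,533 MT/s and a hypothetical 3-billion-parameter language model with 4-bit weights. The tokens-per-second figure is only an upper bound for memory-bound text generation, where each generated token reads every weight once; it ignores caches, other memory traffic and the fact that real systems run below peak bandwidth.

```python
# Back-of-the-envelope peak memory bandwidth and what it implies for
# memory-bound on-device text generation. All parameters are assumptions.

def peak_bandwidth_gb_s(transfer_rate_mt_s: float, bus_width_bits: int) -> float:
    """Peak bandwidth in GB/s = transfers per second x bytes per transfer."""
    return transfer_rate_mt_s * 1e6 * (bus_width_bits / 8) / 1e9


def max_tokens_per_second(bandwidth_gb_s: float, params_billion: float, bits_per_param: int) -> float:
    """Upper bound if every generated token must stream all weights from DRAM once."""
    model_gb = params_billion * 1e9 * bits_per_param / 8 / 1e9
    return bandwidth_gb_s / model_gb


bw = peak_bandwidth_gb_s(8533, 64)  # assumed 64-bit bus at 8,533 MT/s
print(f"peak bandwidth: {bw:.1f} GB/s")
print(f"3B-param model, 4-bit weights: <= {max_tokens_per_second(bw, 3.0, 4):.0f} tokens/s")
```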

Storage speed matters too, since AI features often require fast loading and updating of models. NAND flash is widely used, and standards such as UFS and NVMe can accelerate model loading and write performance. In addition, uMCP packages that integrate DRAM (LPDDR) and flash (UFS) support smaller form factors and improved performance. Together, advances in both volatile and non-volatile memory help edge AI deliver faster responses.
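Storage read speed similarly bounds how quickly a model can be loaded into memory. The read speeds below are assumed round numbers, not the specifications of any particular UFS or NVMe device, and the model size reuses the hypothetical 3-billion-parameter, 4-bit example.

```python
# Rough model-load-time estimate; speeds and size are illustrative assumptions.

def load_time_seconds(model_bytes: float, read_mb_per_s: float) -> float:
    """Time to read a model file sequentially, ignoring file-system overhead."""
    return model_bytes / (read_mb_per_s * 1e6)


model_bytes = 1.5e9  # hypothetical: 3B parameters stored as 4-bit weights
for label, speed_mb_s in [("slower storage (assumed 1,000 MB/s)", 1_000),
                          ("faster storage (assumed 4,000 MB/s)", 4_000)]:
    print(f"{label}: {load_time_seconds(model_bytes, speed_mb_s):.2f} s")
```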

Sensors: AI's Eyes and Ears

For edge AI to understand the real world, it needs data from sensors. Cameras act as eyes, microphones as ears, and accelerometers and gyroscopes provide motion and orientation signals. Many smartphones and IoT devices incorporate CMOS image sensors, MEMS microphones and environmental sensors—each powered by advanced semiconductor design.

CMOS sensors convert light into electrical signals at high speed. More recently, smart image sensors with on-sensor processing—including AI-related functions—have emerged, enabling tasks such as object recognition at the moment an image is captured. MEMS sensors use microstructures to translate physical changes such as acceleration and air pressure into electrical signals.
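As an example of turning raw MEMS signals into orientation, a classic technique is the complementary filter, which blends a gyroscope's integrated angle (smooth but prone to drift) with an accelerometer's gravity-based angle (noisy but drift-free). This is a minimal sketch with synthetic data; the 0.98 blending weight, the 100 Hz sample rate and the gyro bias are assumptions.

```python
import math

# Minimal complementary filter estimating pitch from a gyroscope and an
# accelerometer. Sample data, rate and alpha are hypothetical.

def accel_pitch_deg(ax: float, ay: float, az: float) -> float:
    """Pitch implied by the gravity vector measured by the accelerometer."""
    return math.degrees(math.atan2(-ax, math.hypot(ay, az)))


def complementary_filter(samples, dt: float, alpha: float = 0.98) -> float:
    """samples: iterable of (gyro_pitch_rate_deg_s, ax, ay, az) tuples."""
    pitch = 0.0
    for rate, ax, ay, az in samples:
        gyro_estimate = pitch + rate * dt  # integrate angular rate
        accel_estimate = accel_pitch_deg(ax, ay, az)
        pitch = alpha * gyro_estimate + (1 - alpha) * accel_estimate
    return pitch


# Device held still at 10 degrees pitch; gyro reports a constant 0.5 deg/s bias.
tilt = math.radians(10)
still = [(0.5, -math.sin(tilt), 0.0, math.cos(tilt))] * 500
result = complementary_filter(still, dt=0.01)
print(f"estimated pitch: {result:.1f} deg")
```

Even with a constant gyro bias, the estimate settles at about 10.2 degrees instead of drifting without bound, because the accelerometer term keeps pulling it back toward the true tilt.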

What makes edge AI truly valuable is the ability to process these signals immediately. With higher bus bandwidth and faster on-device processing, systems can analyze large volumes of sensor data in real time—without sending raw data to the cloud. This enables functions such as local person and object recognition from cameras and on-device speaker or command detection from microphones. By tightly integrating sensors and AI chips, edge AI can deliver real-time intelligence while also strengthening privacy.
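One simple pattern for on-device audio is an energy gate that wakes a heavier recognition model only when there is likely sound to analyze, so raw audio does not have to leave the device. The sketch is illustrative: the 20 ms frame size, the threshold and the synthetic signal are assumptions, and production systems typically use trained voice-activity or keyword-spotting models rather than a fixed energy threshold.

```python
import math

# Illustrative energy gate for microphone frames: only frames whose RMS
# energy exceeds a threshold would be passed on to a heavier model.

def frame_rms(frame: list[float]) -> float:
    """Root-mean-square amplitude of one audio frame."""
    return math.sqrt(sum(s * s for s in frame) / len(frame))


def active_frames(signal: list[float], frame_len: int, threshold: float) -> list[int]:
    """Return indices of frames whose RMS energy exceeds the threshold."""
    hits = []
    for i in range(len(signal) // frame_len):
        frame = signal[i * frame_len:(i + 1) * frame_len]
        if frame_rms(frame) > threshold:
            hits.append(i)
    return hits


sample_rate = 16_000
frame_len = 320  # 20 ms frames at 16 kHz
silence = [0.001 * math.sin(2 * math.pi * 50 * n / sample_rate) for n in range(3200)]
tone = [0.3 * math.sin(2 * math.pi * 440 * n / sample_rate) for n in range(3200)]
signal = silence + tone + silence  # synthetic stand-in for microphone input

print(active_frames(signal, frame_len, threshold=0.05))
```

Only frames 10 through 19, which contain the tone, pass the gate; the quiet frames on either side never trigger further processing.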

Packaging: Miniaturization and Heat Management

Edge AI depends not only on chips themselves but also on advances in packaging. Packaging protects chips and connects them to the outside world—but it also influences size, performance and thermal behavior.

As devices have become thinner and smaller, high-density packaging has advanced significantly. In smartphones, Package on Package—stacking memory on top of logic—has become common. 3D stacking can connect multiple chips vertically, reducing signal delay while saving board space. However, higher density also increases heat, so thermal solutions such as heat spreaders and cooling structures are increasingly important.
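The heat problem can be estimated with the first-order steady-state relation T_j = T_a + P × θ_JA: junction temperature equals ambient temperature plus dissipated power times junction-to-ambient thermal resistance. The power and resistance values below are hypothetical and only show how better heat spreading (lower θ_JA) lowers chip temperature for the same workload.

```python
# First-order steady-state thermal estimate; all values are hypothetical.

def junction_temp_c(ambient_c: float, power_w: float, theta_ja_c_per_w: float) -> float:
    """Junction temperature = ambient + power x junction-to-ambient resistance."""
    return ambient_c + power_w * theta_ja_c_per_w


for label, theta in [("with heat spreader (assumed 15 C/W)", 15.0),
                     ("without heat spreader (assumed 30 C/W)", 30.0)]:
    tj = junction_temp_c(ambient_c=35.0, power_w=3.0, theta_ja_c_per_w=theta)
    print(f"{label}: Tj = {tj:.0f} C")
```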

Another major trend is chiplets, which combine multiple smaller dies into one package. Chiplets can improve yield and cost efficiency by allowing each function to be manufactured in its optimal process. Over time, chiplet-based designs may also enable partial upgrades or reconfiguration. Packaging CPUs, NPUs and memory as chiplets can help balance high performance with energy efficiency.
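The yield argument can be illustrated with the simple Poisson yield model Y = exp(-D × A), where D is defect density and A is die area. The defect density and areas below are assumptions, and the comparison ignores packaging and assembly yield and cost, which matter in practice.

```python
import math

# Chiplet vs monolithic yield under the Poisson model Y = exp(-D * A).
# Defect density and areas are hypothetical.

def poisson_yield(defects_per_cm2: float, area_cm2: float) -> float:
    """Fraction of dies expected to have zero defects."""
    return math.exp(-defects_per_cm2 * area_cm2)


D = 0.1           # defects per cm^2 (assumed)
total_area = 4.0  # cm^2 of logic needed in total (assumed)
n_chiplets = 4

mono_yield = poisson_yield(D, total_area)
chiplet_yield = poisson_yield(D, total_area / n_chiplets)

# Wafer area consumed per *good* product, assuming chiplets are tested
# before assembly so only known-good dies are packaged.
mono_area_per_good = total_area / mono_yield
chiplet_area_per_good = n_chiplets * (total_area / n_chiplets) / chiplet_yield

print(f"monolithic yield {mono_yield:.1%}, chiplet yield {chiplet_yield:.1%}")
print(f"wafer area per good unit: {mono_area_per_good:.2f} vs {chiplet_area_per_good:.2f} cm^2")
```

Under these assumptions, each 1 cm² chiplet yields about 90% versus about 67% for the 4 cm² monolithic die, so less wafer area is wasted per working product.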

Rapidus is placing emphasis on packaging as well, promoting not only front-end manufacturing but also advanced integration with back-end processes. In their joint work, Rapidus and Tenstorrent are also pursuing chiplet package design to enable faster iteration from design to implementation.

The Future Edge AI Can Create

Edge AI—powered by semiconductor progress—has the potential to make life more convenient, safer and more personalized.

Smarter, More Autonomous Devices

Everyday devices will increasingly include AI that allows them to act more independently. Next-generation smart appliances may learn routines and optimize behavior automatically. Robot vacuums and home robots can better understand their surroundings through sensors and on-device intelligence, working more smoothly and efficiently. With high-performance inference enabled by compact chips, devices in homes and offices may become more context-aware and helpful.

Safer, More Resilient Infrastructure

Edge AI can improve security and safety by enabling local detection of anomalies. For example, surveillance cameras equipped with edge AI can flag unusual events on-site, without transmitting sensitive video streams over networks. This can support crime prevention and disaster response while still respecting privacy.

Vehicles will also benefit as on-board processors interpret complex surroundings in real time, supporting safer and more advanced autonomous driving. Over time, embedding intelligence into infrastructure such as traffic lights and roads could help advance smarter cities that do not rely entirely on cloud connectivity.

More Personalized Healthcare

Wearables and medical devices equipped with edge AI can continuously analyze signals such as heart rate and blood pressure, helping detect anomalies earlier and provide personalized feedback. If portable diagnostic devices become more widespread, AI can support on-site assessments even without stable internet access. This points toward a future where AI can act like an accessible, always-available health assistant.
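One simple way a wearable could flag unusual readings locally is a rolling z-score: compare each new value with the mean and standard deviation of recent readings. This is an illustrative signal-processing sketch with made-up heart-rate data, window size and threshold; it is not a medical algorithm.

```python
from collections import deque
import statistics

# Illustrative rolling z-score detector for a heart-rate stream, run on-device.
# Window, threshold and readings are hypothetical; not for medical use.

def detect_anomalies(readings, window: int = 30, z_threshold: float = 3.0):
    """Yield (index, value) for readings far outside the recent baseline."""
    history = deque(maxlen=window)
    for i, bpm in enumerate(readings):
        if len(history) == window:
            mean = statistics.fmean(history)
            stdev = statistics.pstdev(history)
            if stdev > 0 and abs(bpm - mean) / stdev > z_threshold:
                yield i, bpm
        history.append(bpm)


readings = [72, 74, 71, 73, 75, 72, 70, 74, 73, 72] * 4 + [130] + [73, 72, 74]
print(list(detect_anomalies(readings)))
```

Only the sudden spike at index 40 is reported; normal beat-to-beat variation stays well under the threshold, and no raw data needs to leave the device to make that decision.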

Environment and Sustainability

Energy efficiency and CO₂ reduction will be essential as edge AI scales. The semiconductor industry is increasing efforts to reduce environmental impact through energy-saving technologies and broader adoption of renewable power. Edge AI can support sustainability not only through efficient computing, but also by enabling smarter, more optimized systems across society.

Conclusion

Edge AI represents a shift toward running AI locally on devices—without relying entirely on the cloud—and it has been made possible by major advances in semiconductor technology. Today, computing capability once limited to data centers is increasingly being packed into smartphone-sized chips, enabling fast, secure and convenient AI experiences close to users.

Edge AI and cloud AI have different strengths, and they will likely continue to evolve in complementary ways. For edge AI in particular, high-performance, power-efficient semiconductor chips remain the key driver of innovation. As semiconductors advance, AI capabilities will continue to expand, and with them the value AI brings to daily life. The future of edge AI will go hand in hand with semiconductor progress, and its strategic importance is set to grow even further.
